Dimensions of Intelligence

While already impressive, AI is still in its infancy. Gain a "mere mortals" perspective on what enables AI and where it's all heading from here.

There is little doubt that AI represents the transformative force of our lifetime. This is why 9 in 10 businesses are accelerating their spending on AI, with the vast majority declaring it their #1 investment priority.

While it’s nearly impossible to predict how much AI will reshape the business landscape, many businesses have already achieved double-digit productivity gains, well above the overall 3% reported by the U.S. Bureau of Labor Statistics (BLS) in 2023.

While super impressive, AI is only in its infancy.

Let’s explore why.

To start, AI is an umbrella term. Much of today’s deployed AI consists of expert systems: the programmatic transfer of knowledge into rules-based systems. These lack the algorithmic “brains” known as machine learning (ML).

Furthermore, some 95% of today’s deployed ML relies on the least sophisticated techniques and is only capable of performing narrowly defined tasks. This will change rapidly as the more sophisticated AI techniques discussed in this article become mainstream.

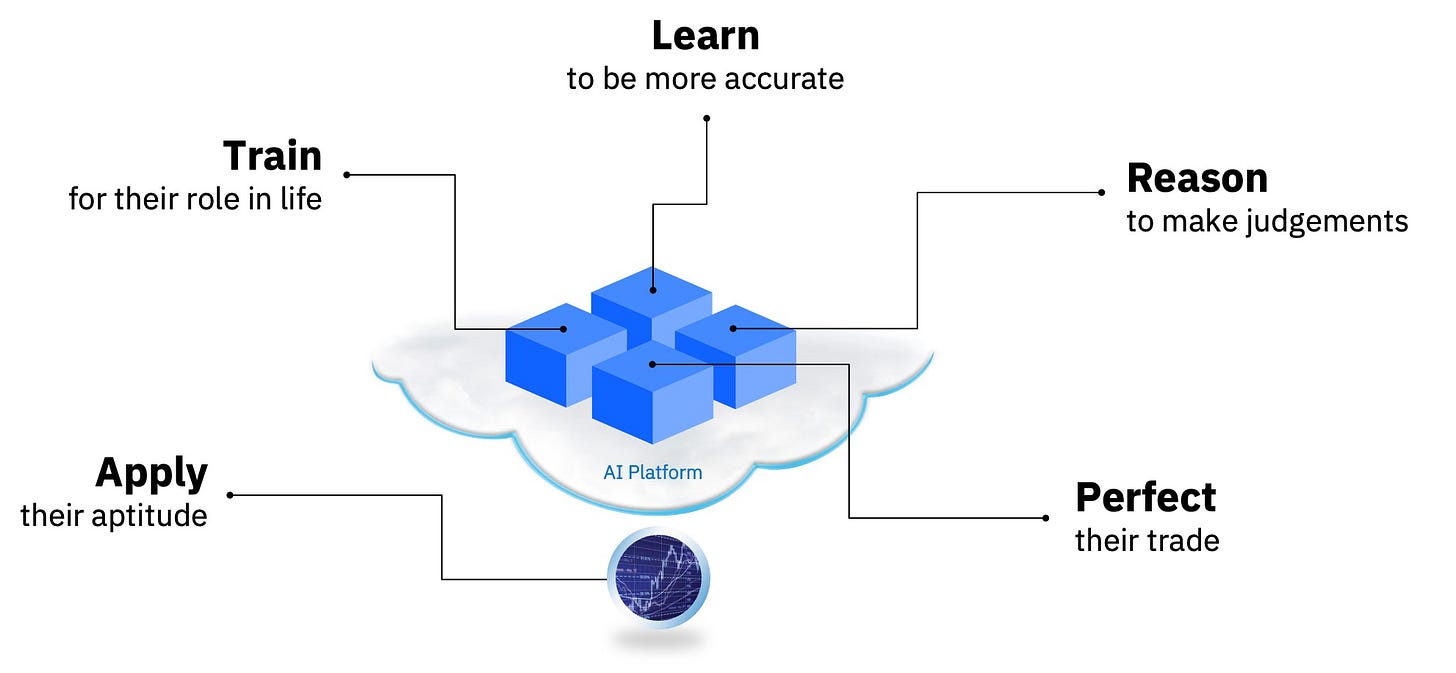

Put simply, five core dimensions of intelligence enable the smarts in AI systems. They define how AI trains, learns, reasons, perfects its data and applies what it knows.

As sophistication grows in each of these dimensions, it creates a multiplier effect of algorithmic “intelligence”. Understanding the various techniques in each dimension will provide insight into the capabilities of future-state AI.

Let’s dive in!

Training

The first dimension defines the AI model’s role in life and trains it accordingly. There are three core methods:

(1) Trains with KNOWN data and KNOWN outcomes – In this entry-level method, models are fed examples (data) where the correct outcome is known. You know what you are looking for from historical evidence. The models consume a labeled set of inputs and learn to produce a labeled set of outputs, with explicit direction on how to do so (supervised). For example, predict the optimal selling price for each of the 1,000 cars on my sales lot.
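To make the supervised idea concrete, here is a minimal Python sketch that learns a price from labeled history. The mileage and price figures are hypothetical, and a single-feature least-squares line stands in for a real pricing model, which would use many more inputs (age, trim, condition and so on).

```python
def fit_line(xs, ys):
    """Ordinary least squares for one input feature: returns (intercept, slope)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return mean_y - slope * mean_x, slope

# Hypothetical labeled history: mileage (thousands of miles) -> known sale price (USD).
mileage = [10, 30, 50, 70, 90]
sale_price = [30000, 26000, 22000, 18000, 14000]

intercept, slope = fit_line(mileage, sale_price)

def predict_price(miles):
    """Apply the learned line to a car whose price is not yet known."""
    return intercept + slope * miles

print(round(predict_price(40)))  # 24000 on this toy data
```

The "known outcomes" are the sale prices: the model is only able to learn the line because every training row comes with the right answer attached.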

(2) Trains with UNKNOWN data and UNKNOWN outcomes – This method doesn’t rely on labeled data, nor is it told what the correct outcomes are. Essentially, you don’t know what you are looking for because you don’t yet understand the data the model feeds on. These models are trained to swim in a lake of data and make sense of it all without explicit direction (unsupervised). For example, predict an outcome of a legal case based on case law precedent.
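A classic unsupervised technique is k-means clustering, which groups similar records without ever being told what the groups mean. The sketch below is a bare-bones version on made-up 2-D points; the data, `k=2` and the iteration count are assumptions for illustration.

```python
import random

def kmeans(points, k, iterations=20, seed=0):
    """Plain k-means: groups unlabeled points purely by similarity."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen points
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k),
                          key=lambda i: (p[0] - centroids[i][0]) ** 2 + (p[1] - centroids[i][1]) ** 2)
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of the points assigned to it.
        for i, members in enumerate(clusters):
            if members:
                centroids[i] = (sum(m[0] for m in members) / len(members),
                                sum(m[1] for m in members) / len(members))
    return centroids, clusters

# Hypothetical, unlabeled 2-D records: nobody has said what the groups mean.
points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.5)]
centroids, clusters = kmeans(points, k=2)
print(sorted(centroids))
```

On this toy data the two centres settle at (1.5, 1.5) and (8.5, 8.5). Interpreting what each group *means* is still left to a human.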

(3) Trains with NO DATA and SELF-DETERMINES outcomes – This is the “dude, you’re on your own” method, and the most sophisticated. The model self-trains by performing tasks without being fed a dataset or given guidance. Through trial and error (experimentation), it evaluates the consequences of its actions and determines the best behavior based on the data it generates itself (reinforcement learning). Sound familiar, mere mortals? For example, an autonomous drone that takes aerial photos of wildlife movements.
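Trial-and-error learning of this kind is usually called reinforcement learning. As a rough sketch, tabular Q-learning on a hypothetical five-cell corridor shows the loop: act, observe the reward, update the estimated value of that action. The environment, rewards and hyperparameters (`alpha`, `gamma`, `epsilon`) are all assumptions chosen for illustration.

```python
import random

N_STATES = 5         # hypothetical corridor: positions 0..4, agent starts at 0
GOAL = 4             # reaching position 4 pays reward 1 and ends the episode
ACTIONS = (-1, +1)   # index 0 = move left, index 1 = move right

def step(state, move):
    """Environment: the agent only learns about it by acting and seeing what happens."""
    nxt = min(max(state + move, 0), N_STATES - 1)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # estimated value of each action in each state
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            left, right = q[state]
            if rng.random() < epsilon or left == right:
                a = rng.randrange(2)          # explore (or break a tie at random)
            else:
                a = 0 if left > right else 1  # exploit the current best guess
            nxt, reward, done = step(state, ACTIONS[a])
            # Self-generated training signal: reward now plus discounted future value.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
            if done:
                break
    return q

q = q_learning()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(GOAL)]
print(policy)
```

No dataset is supplied up front: every training example is produced by the agent’s own moves, and it ends up preferring "right" in every cell.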

Fun Fact: 30% of today’s ML is unsupervised (2), up from 10% last year.

Learning

The second dimension defines how AI learns by iteratively reprogramming itself over time. There are three core levels of sophistication:

(1) Learns from a SINGLE sequence of BINARY decisions – Most ML today employs this method, where models progress through a tree-like structure of yes/no decision points to reach a final prediction. Think of a decision tree where each decision point is a “node”, each path forward a “branch”, and each final prediction a “leaf”. These models adapt their structure by evaluating which paths produce the most predictive power. An example use case is a model that advises police how to safely respond to a crime in progress.
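A minimal sketch of how a single tree picks its yes/no questions: at each node it tries every candidate split and keeps the one that leaves the purest groups, measured here with Gini impurity. The incident features and labels are hypothetical (number of suspects, whether a weapon was reported, and whether to wait for backup), and `max_depth=3` is an arbitrary cap.

```python
def gini(labels):
    """Impurity of a group of 0/1 labels: 0.0 means every label agrees."""
    n = len(labels)
    if n == 0:
        return 0.0
    p = sum(labels) / n
    return 1.0 - p * p - (1 - p) * (1 - p)

def best_split(rows, labels):
    """Try every yes/no question 'feature f <= t?' and keep the purest one."""
    best = None
    for f in range(len(rows[0])):
        for t in sorted({r[f] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if best is None or score < best[0]:
                best = (score, f, t)
    return best

def build_tree(rows, labels, depth=0, max_depth=3):
    majority = int(sum(labels) * 2 >= len(labels))
    if gini(labels) == 0.0 or depth == max_depth:
        return majority  # leaf: a final prediction
    split = best_split(rows, labels)
    if split is None:
        return majority
    _, f, t = split
    left = [(r, y) for r, y in zip(rows, labels) if r[f] <= t]
    right = [(r, y) for r, y in zip(rows, labels) if r[f] > t]
    return (f, t,
            build_tree([r for r, _ in left], [y for _, y in left], depth + 1, max_depth),
            build_tree([r for r, _ in right], [y for _, y in right], depth + 1, max_depth))

def predict(tree, row):
    # Follow the branches until a leaf (a plain int label) is reached.
    while isinstance(tree, tuple):
        f, t, left, right = tree
        tree = left if row[f] <= t else right
    return tree

# Hypothetical incidents: (suspects, weapon_reported) -> wait_for_backup (1 = yes).
incidents = [(1, 0), (1, 1), (2, 0), (3, 0), (3, 1), (4, 1)]
wait_for_backup = [0, 1, 0, 1, 1, 1]
tree = build_tree(incidents, wait_for_backup)
print(tree)
```

Here the tree first asks "more than two suspects?" and, if not, "was a weapon reported?", which reproduces every training label.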

(2) Learns from MULTIPLE sequences of BINARY decisions – As a more sophisticated version of the above, these models operate as a forest of decision trees that partner to create a prediction. Each tree takes a randomized subset of the input data to create a sub-prediction, and the sub-predictions are combined into a final prediction. This generally produces better outcomes, especially with very large datasets. For example, predicting a storm track’s effect on traffic five days in advance.
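The forest idea can be sketched by training many small trees on random resamples of the data and letting them vote. To stay short, each "tree" below is a one-question stump on a randomly chosen feature, and the storm data (rainfall, wind speed, traffic jam yes/no) is hypothetical; a production random forest grows deeper trees and samples features at every split.

```python
import random

def fit_stump(rows, labels, feature):
    """One-question 'tree': feature <= t ? left_label : right_label."""
    best = None
    for t in sorted({r[feature] for r in rows}):
        left = [y for r, y in zip(rows, labels) if r[feature] <= t]
        right = [y for r, y in zip(rows, labels) if r[feature] > t]
        if not left or not right:
            continue
        left_label = int(2 * sum(left) >= len(left))
        right_label = int(2 * sum(right) >= len(right))
        errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
        if best is None or errors < best[0]:
            best = (errors, feature, t, left_label, right_label)
    if best is None:  # all sampled values identical: fall back to a constant vote
        label = int(2 * sum(labels) >= len(labels))
        return (feature, float("inf"), label, label)
    return best[1:]

def fit_forest(rows, labels, n_trees=25, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        # Each tree sees a bootstrap sample (rows drawn with replacement)...
        idx = [rng.randrange(len(rows)) for _ in rows]
        # ...and a randomly chosen feature, so the trees make different mistakes.
        feature = rng.randrange(len(rows[0]))
        forest.append(fit_stump([rows[i] for i in idx], [labels[i] for i in idx], feature))
    return forest

def predict(forest, row):
    votes = sum(left if row[f] <= t else right for f, t, left, right in forest)
    return int(2 * votes > len(forest))  # majority vote of the sub-predictions

# Hypothetical storm data: (rainfall_mm, wind_kph) -> traffic jam (1 = yes).
rows = [(2, 5), (5, 10), (8, 12), (10, 15), (12, 18),
        (30, 40), (35, 45), (40, 50), (45, 55), (50, 60)]
jam = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
forest = fit_forest(rows, jam)
print(predict(forest, (1, 2)), predict(forest, (60, 70)))
```

An odd number of trees (25) avoids tied votes; any single stump trained on an unlucky resample gets outvoted by the rest.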

(3) Learns from LAYERS of interconnected VARIABLE decisions – This method loosely mimics the human brain, which is composed of vast layers of interconnected “neurons” that process, rank and propagate information in dynamic ways. Each “neuron” transforms its weighted inputs and passes the result on to “neurons” in the next layer, and so on, until the final layer produces an outcome. Training adjusts those weights until the outcomes are accurate. This method, known as a neural network, can scale in sophistication based on the transformations employed at each layer. For example, a neural network like the one behind ChatGPT can write a recommended legal strategy brief.
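A tiny network shows why layers matter: no single yes/no threshold on two inputs can compute XOR, but two hidden neurons feeding one output neuron can. The weights below are set by hand for illustration (an assumption, not learned); in practice a training algorithm such as backpropagation would search for weights like these.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of every input, then squashes it."""
    return [sigmoid(sum(w * x for w, x in zip(ws, inputs)) + b)
            for ws, b in zip(weights, biases)]

# Hand-set weights (illustrative, not learned here):
# hidden neuron 1 behaves like OR, hidden neuron 2 like NAND,
# and the output neuron ANDs them together, which yields XOR.
HIDDEN_W = [[20.0, 20.0], [-20.0, -20.0]]
HIDDEN_B = [-10.0, 30.0]
OUTPUT_W = [[20.0, 20.0]]
OUTPUT_B = [-30.0]

def forward(x1, x2):
    hidden = layer([x1, x2], HIDDEN_W, HIDDEN_B)   # first layer transforms the raw inputs
    (out,) = layer(hidden, OUTPUT_W, OUTPUT_B)     # next layer builds on those results
    return out

for a in (0, 1):
    for b in (0, 1):
        print(a, b, round(forward(a, b)))
```

The printed table is 0, 1, 1, 0: the hidden layer turns an impossible single decision into two easy ones.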

Fun Fact: Only 20% of ML uses neural networks (3), a share set to double in 2024.

Reasoning

The third dimension enables AI to make judgements and problem-solve based on what is considered to be true. It becomes more sophisticated as it progresses from defining the what, to the how, to the why of an outcome.

(1) Reasons to predict the WHAT – The vast majority of today’s AI can only predict the “what” by correlating variables across a dataset, telling us how much one changes when others change. These models can deduce specific observations from generalized information or induce general observations from specific information. Essentially, they identify patterns, associations and anomalies to best predict what will happen given certain conditions and previous “memories”. For example, defining the risk level of anomalies identified in IT operations.
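Correlation and simple anomaly flagging are the bread and butter of "what" models. A minimal sketch, using hypothetical IT-operations telemetry: Pearson correlation measures how tightly two series move together, and a z-score rule flags readings far from the norm. The two-standard-deviation threshold is an assumed default, not a standard.

```python
import math

def pearson(xs, ys):
    """Pearson correlation: how strongly two variables move together (-1 to 1)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

def anomalies(values, threshold=2.0):
    """Indexes of points more than `threshold` standard deviations from the mean."""
    n = len(values)
    mean = sum(values) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
    return [i for i, v in enumerate(values) if abs(v - mean) > threshold * std]

# Hypothetical telemetry.
cpu_load = [20, 35, 50, 65, 80, 95]
latency_ms = [110, 125, 140, 160, 175, 190]
error_counts = [3, 4, 2, 5, 3, 4, 41, 3]

print(round(pearson(cpu_load, latency_ms), 3))
print(anomalies(error_counts))
```

The correlation says latency rises with load, and index 6 stands out as an anomaly, but neither number says *why*.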

(2) Reasons to predict the HOW – This method builds on correlative models to handle more complex and diverse problems. It uses statistical transformations and large multifeatured datasets to create a view of not only what will happen but “how” it knows it will happen. That is, it identifies influential factors, conditions and pathways to an outcome that correlative models alone cannot. For example, explaining how to win a demographic voting group in an election given current events.
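One basic way to move from "what" toward "how" is multiple regression, which estimates how much each factor moves the outcome while holding the others fixed. In the hypothetical campaign data below the two factors rise together, so correlation alone makes both look strongly tied to support; the regression separates their individual effects. The names `ad_exposure` and `canvass_visits` and all values are made up.

```python
# Hypothetical campaign data per precinct (made up for illustration).
ad_exposure = [1, 2, 3, 4, 5, 6]
canvass_visits = [2, 1, 4, 3, 6, 5]
support = [10, 12, 18, 20, 26, 28]

def mean(v):
    return sum(v) / len(v)

def fit_two_factor(x1, x2, y):
    """Least squares for y = b0 + b1*x1 + b2*x2 via the centered normal equations."""
    m1, m2, my = mean(x1), mean(x2), mean(y)
    d1 = [a - m1 for a in x1]
    d2 = [b - m2 for b in x2]
    dy = [c - my for c in y]
    s11 = sum(a * a for a in d1)
    s22 = sum(b * b for b in d2)
    s12 = sum(a * b for a, b in zip(d1, d2))
    s1y = sum(a * c for a, c in zip(d1, dy))
    s2y = sum(b * c for b, c in zip(d2, dy))
    det = s11 * s22 - s12 * s12  # nonzero as long as the factors aren't perfectly collinear
    b1 = (s22 * s1y - s12 * s2y) / det
    b2 = (s11 * s2y - s12 * s1y) / det
    return my - b1 * m1 - b2 * m2, b1, b2

b0, b_ads, b_canvass = fit_two_factor(ad_exposure, canvass_visits, support)
print(b0, b_ads, b_canvass)
```

On this toy data it prints `5.0 3.0 1.0`: each extra unit of ad exposure adds about three points of support, three times the effect of a canvass visit.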

(3) Reasons to predict the WHY – Ultimately, everyone wants to know why things happen, what can be done to change things, and the consequences of various interventions. Basically, this model identifies precise cause-and-effect relationships that can be modeled with “what if” scenarios. Humans are causal by nature, so AI needs to become causal if it is to reason, explain and make decisions collaboratively with humans. This is at the heart of the causal AI wave that is now emerging. For example, determining which of many options would best retain my customers across my 13 retail stores.
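A "what if" question can be made precise with a structural causal model. The sketch below is entirely hypothetical: busy stores both receive more retention offers and retain more customers, so the raw association overstates the offer’s effect. Comparing observed rates with rates under an intervention (often written do(X)) separates the two.

```python
# Hypothetical structural causal model for one retailer:
#   Z = store is busy, X = retention offer sent, Y = customer retained.
P_BUSY = 0.5

def p_offer(busy):
    """Assumed policy: managers mostly send offers at busy stores."""
    return 0.8 if busy else 0.2

def p_retained(busy, offer):
    """Assumed mechanism: busy stores retain more; the offer adds 10 points."""
    return 0.3 + 0.4 * busy + 0.1 * offer

def observed(offer):
    """P(Y=1 | X=offer): what passive correlation in the data shows."""
    num = den = 0.0
    for busy in (0, 1):
        pz = P_BUSY if busy else 1 - P_BUSY
        px = p_offer(busy) if offer else 1 - p_offer(busy)
        num += pz * px * p_retained(busy, offer)
        den += pz * px
    return num / den

def intervened(offer):
    """P(Y=1 | do(X=offer)): 'what if we set the offer for everyone?'"""
    return sum((P_BUSY if busy else 1 - P_BUSY) * p_retained(busy, offer)
               for busy in (0, 1))

print(round(observed(1) - observed(0), 2))      # 0.34: looks like a big win
print(round(intervened(1) - intervened(0), 2))  # 0.1: the causal effect in this model
```

The gap between 0.34 and 0.1 is exactly the part of the correlation that busy stores, not the offer, are responsible for.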

Fun Fact: Causal AI is projected to grow at a 41% CAGR through 2030.

Perfecting

This dimension relates to optimizing the lifeblood of AI, its data. Four key design principles that will help models perfect their trade include:

(1) The unreasonable EFFECTIVENESS of data – How do I weigh the relative importance of developing the AI model versus the dataset it feeds upon? It's been shown that the performance improvements from algorithmic sophistication are relatively small compared to improvements in the dataset (Peter Norvig, 2009).

(2) The wisdom of CROWDS – Do I source all available knowledge or just a subset considered to be the best? The former risks introducing greater disorder or randomness. The latter risks esotericism, the secret knowledge of a few. It's been shown that favoring diversity over superiority improves outcomes (Surowiecki, 2004).
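The crowd-wisdom claim has a neat arithmetic core, an identity sometimes called the diversity prediction theorem: the crowd’s squared error equals the average individual squared error minus the diversity (spread) of the guesses. A small check with hypothetical estimates:

```python
truth = 100.0
guesses = [80.0, 95.0, 110.0, 130.0, 70.0]  # hypothetical independent estimates

def squared_error(estimate, target):
    return (estimate - target) ** 2

crowd = sum(guesses) / len(guesses)  # the crowd's answer is the average guess
crowd_error = squared_error(crowd, truth)
avg_individual_error = sum(squared_error(g, truth) for g in guesses) / len(guesses)
diversity = sum((g - crowd) ** 2 for g in guesses) / len(guesses)

# crowd error = average individual error - diversity
print(crowd_error, avg_individual_error, diversity)
```

Here the crowd is off by 9 (squared), while the typical individual is off by 465; the 456 points of diversity are what cancel out.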

(3) The capture of INTUITION – Is the capture of explicit knowledge enough? We know humans tap into tacit knowledge and intuition to perfect tasks. We also know these innate characteristics are highly difficult to express and hard to externalize as rules (Polanyi, 1966). Addressing this paradox can create enormous differentiation, as it fuses AI with expert and innate knowledge.

(4) The CAUSALITY phenomenon – How do I minimize false positives? We know an association or trend that appears in groups of data can reverse or disappear when the groups are combined or extended, which invites improper causal interpretations (Simpson, 1951). This highlights the importance of adopting causal AI to open up today’s “black boxes” and expose the precise pathways through an AI model to an outcome.
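A minimal sketch of the reversal Simpson described, using illustrative success counts for two options split by case severity: option A wins within each group, yet B looks better once the groups are pooled, because A handled far more of the hard cases.

```python
# Illustrative (successes, trials) per option, split by case severity.
results = {
    "A": {"mild": (81, 87), "severe": (192, 263)},
    "B": {"mild": (234, 270), "severe": (55, 80)},
}

def success_rate(option, groups):
    """Pooled success rate of an option over the given severity groups."""
    wins = sum(results[option][g][0] for g in groups)
    trials = sum(results[option][g][1] for g in groups)
    return wins / trials

def better(groups):
    return "A" if success_rate("A", groups) > success_rate("B", groups) else "B"

print("mild:", better(["mild"]))                # A
print("severe:", better(["severe"]))            # A
print("combined:", better(["mild", "severe"]))  # B
```

A model trained only on the pooled numbers would confidently pick the wrong option: a textbook false positive driven by the hidden severity variable.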

Fun Fact: 8 in 10 AI failures are due to dataset architecture, not AI design.

Applying

The final dimension relates to how architectural designs affect the scope and application of the model’s intelligence.

(1) Send your data, I’ll get back to you – This is the predominant approach today, where AI models are centralized on a cloud platform and fed data from standardized pipelines. This requires aggregating and moving data. While this enables greater control, it reduces the dynamic nature of the AI system and the diversity of its data inputs. In addition, it exacerbates issues of performance, data privacy and the time-value of data. Collectively, this limits the ability of the AI system to become progressively more intelligent.

(2) Keep your data, I’ll come to you – A decentralized AI architecture offers greater sophistication, especially when feeding off high-volume, dynamic, localized or time-dependent data streams. AI models are delivered to where the data lives, enabling them to train and learn (evolve) within the unique conditions of various use cases. This also mitigates performance, security and data privacy risks. And since data is the lifeblood of AI, models that evolve alongside dynamically changing data become more intelligent.
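One concrete form of "I’ll come to you" is federated learning: each site fits a model on its own data and shares only the model parameters. The sketch below is a deliberately tiny, one-round version in the spirit of federated averaging (FedAvg), where the "model" is just a mean; the site names and values are hypothetical.

```python
# Hypothetical local datasets held at three sites; raw rows never leave a site.
sites = {
    "store_a": [12.0, 15.0, 11.0],
    "store_b": [20.0, 22.0],
    "store_c": [9.0, 10.0, 8.0, 13.0],
}

def local_update(rows):
    """Runs where the data lives: fit a tiny model (here, just the mean) locally."""
    return sum(rows) / len(rows), len(rows)

def federated_average(updates):
    """Combine local models, weighting each by how many rows it was trained on."""
    total = sum(n for _, n in updates)
    return sum(param * n for param, n in updates) / total

updates = [local_update(rows) for rows in sites.values()]  # only (param, count) travels
global_model = federated_average(updates)

# Sanity check: matches what a centralized fit on pooled data would give.
pooled = [x for rows in sites.values() for x in rows]
print(global_model, sum(pooled) / len(pooled))
```

For a mean the weighted average reproduces the centralized answer exactly (up to rounding); richer models repeat this exchange over many rounds.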

(3) Let’s collaborate for the good of all – Known loosely as SWARM intelligence, this approach builds on the decentralized model to drive collaborative learning and inferencing across a network of AI models. Within defined parameters and conditions, the models feed on each other to improve outcomes both independently and collectively. Since each model can also process and act on input from the others, together they mimic the collective intelligence of many, drawing on an array of diverse data streams.
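Peer-to-peer collaboration without a central server can be sketched with gossip (consensus) averaging: each model repeatedly blends its parameter with its neighbours’ until the network agrees. This is a hypothetical, minimal illustration of the idea, not how any particular swarm-learning product works; the ring layout, the five starting values and the 50 rounds are assumptions.

```python
# Hypothetical: five peers on a ring, each holding one locally learned parameter.
params = [1.0, 4.0, 2.0, 8.0, 5.0]

def gossip_round(values):
    """Each peer replaces its value with the average of itself and its two ring neighbours."""
    n = len(values)
    return [(values[(i - 1) % n] + values[i] + values[(i + 1) % n]) / 3 for i in range(n)]

values = params
for _ in range(50):
    values = gossip_round(values)

print([round(v, 3) for v in values])  # every peer converges on the shared mean
print(sum(params) / len(params))      # 4.0
```

No peer ever sees the whole network, yet each ends up with the group’s combined answer, because every round preserves the total while shrinking the disagreement.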

Fun Fact: SWARM AI is projected to grow at a 40% CAGR to $1B by 2030.

Summary

Collectively, as sophistication grows in each of these five dimensions, AI systems will experience a multiplier effect of greater intelligence. This is why, among other reasons, we are truly in the infancy of AI’s impact, with more and more capable systems on the horizon.

It will not be long before AI systems can truly reason, problem-solve, explain themselves, navigate the sensory world, and manipulate “objects” to help humans accomplish things. As models progress, AI promises to deliver more value per dollar invested than any technology we’ve seen so far, and likely by a lot.

We’re in for one hell of a ride into the future. Bet on that!