Causality in Agentic AI

There is a missing ingredient in today’s AI, which, when added, will make AI a truly indispensable partner in business and scale ROI over the long run.

This ingredient is also key to creating agentic AI systems, where networks of agents help humans make decisions, solve problems, and even act on their behalf. The missing ingredient is CAUSALITY and the science of WHY.

This article is the third in a series of articles on the emerging impact of Causal AI and will cover:

• The need for causality

• Causality in agentic systems

• Causal reasoning concepts

• What to do and when

Summary

The advent of generative AI and Large Language Models (LLMs) sparked an acceleration of AI adoption among enterprises. AI now represents the highest spending trajectory among all technology categories, nearly 2x all others.

However, most businesses now envision applying more advanced AI techniques to complement generative AI, empowering more transformative, higher-ROI use cases that automate workflows and enhance productivity.

To do so, many businesses are creating new Agentic AI systems that unleash networks of AI agents to improve their organization’s decision-making and problem-solving skills. In many cases, these agents can even act autonomously when human intervention is not feasible or warranted.

Given its highly intriguing promise of value, it’s likely that 2025 will become the year of agentic AI. According to a Capgemini survey of 1,100 executives at large enterprises, 82% plan to deploy AI agents within the next three years, growing from 10% today.

The trend toward Agentic AI raises the question: what is the difference between AI assistants (or chatbots), AI agents, and Agentic AI?

The general consensus is that they differ based on their use case maturity:

AI assistants (or chatbots) assist users in executing tasks, retrieving information, or creating some type of content. They are dependent on user input and act on explicit commands.

AI agents assist users in achieving goals by interacting within a broader environment of considerations. They sense, learn, and adapt based on human and algorithmic feedback loops to help people make better decisions without direct guidance on how to do so.

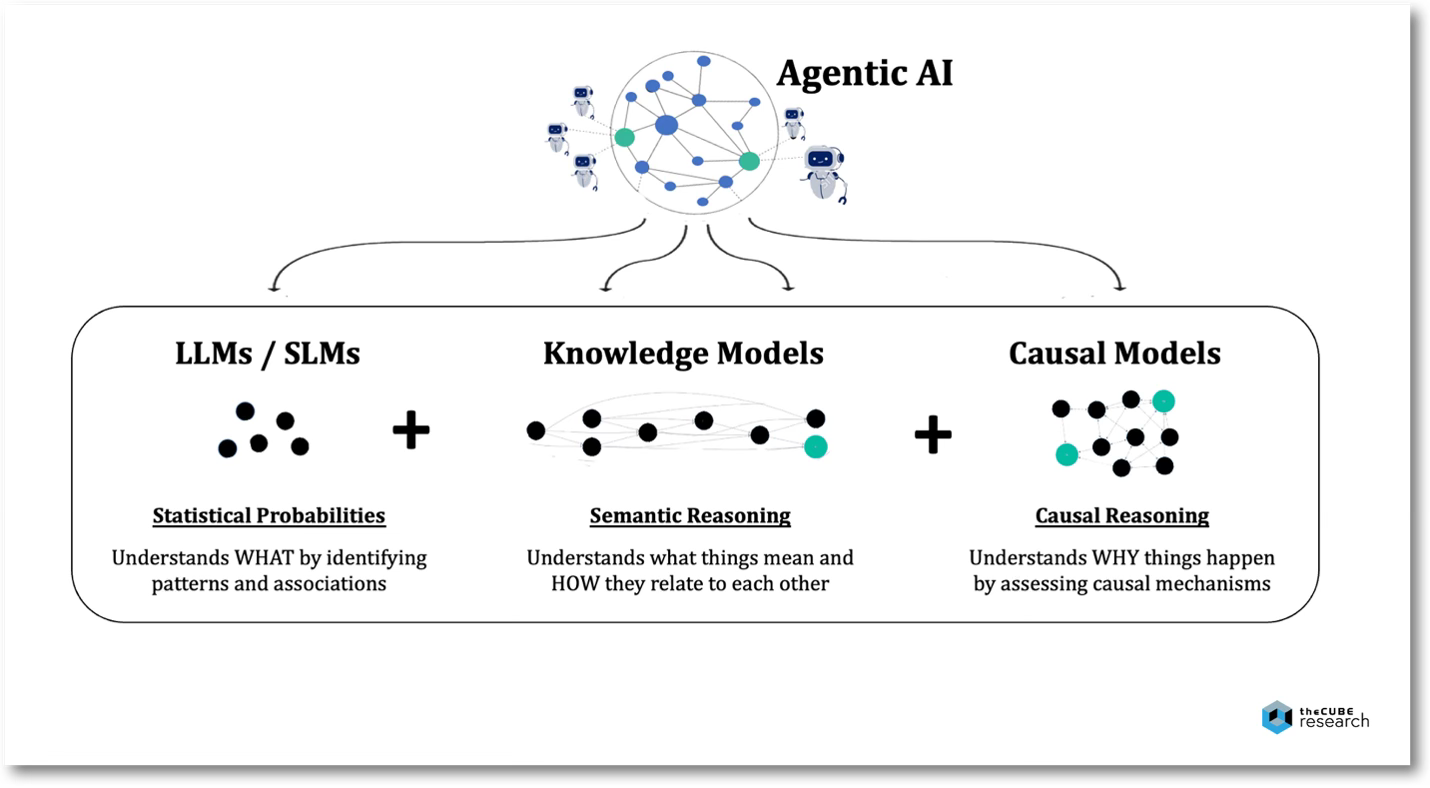

Agentic AI systems assist users and organizations in achieving goals that are too sophisticated for a single agent to handle. They do so by enabling multiple agents with their own sets of goals, behaviors, policies, and knowledge to interact.

Simply put, assistants automate tasks while agents achieve goals.

The key to Agentic AI systems is their ability to reason through a series of steps to understand the nature of the issue, current conditions, possible approaches, consequences of alternate actions, and how to construct an effective solution.

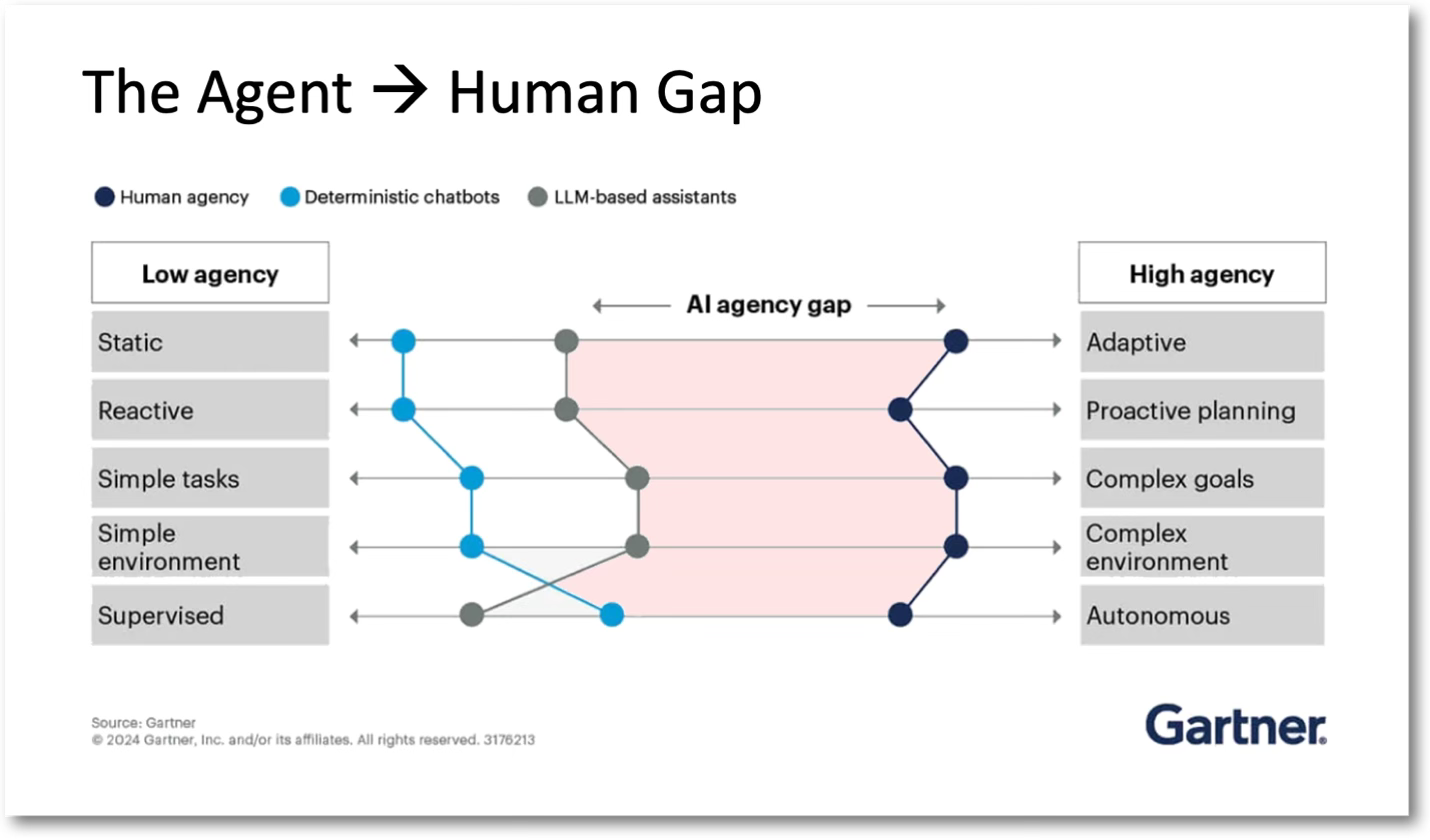

However, as Gartner points out in its agentic AI research, a big gap exists between current generative AI and LLM-based assistants and the promise of AI agents. Today’s AI struggles to understand complex goals, adapt to changing conditions, proactively plan, and act more autonomously.

Causality in Agentic Systems

One of the most critical missing ingredients in closing the “AI agency gap” is causality and the science of why things happen.

Causality will enable businesses to do more than create predictions, generate content, identify patterns, and isolate anomalies. They will be able to play out countless scenarios to understand the consequences of various actions, explain their business’s causal drivers, make better decisions, and analytically problem-solve. AI agents will be better equipped to comprehend ambiguous goals and take action to accomplish those goals.

An array of emerging causal AI methods and tools is now supplying data scientists with new “ingredients” for their AI recipe books, empowering them to infuse an understanding of cause-and-effect into their AI systems:

• Interventions: determine the consequences of alternate actions

• Counterfactuals: evaluate alternatives to the current factual state

• Root Causes: detect and rank causal drivers of an outcome

• Confounders: identify irrelevant, misleading, or hidden influences

• Intuition: capture expertise, know-how, and known conditions

• Pathways: understand interrelated actions to achieve outcomes

• Rationalizations: explain why certain actions are better than others

Collectively, these new “ingredients” promise to enable AI systems to progressively deliver higher degrees of causal reasoning, closing the gap between today’s LLM limitations and future-state agentic systems.
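To make the first two ingredients concrete, here is a minimal sketch of an intervention and a counterfactual on a toy structural causal model. Everything in it is an assumption made up for illustration: the variables (`season`, `discount`, `sales`), the linear equations, and the coefficients. It uses only Python's standard library rather than any particular causal AI tool.

```python
import random

# Hypothetical structural causal model (all coefficients are invented):
#   season   := u_season
#   discount := 0.5 * season + u_discount
#   sales    := 3.0 * discount + 2.0 * season + u_sales

def sales_eq(discount, season, u_sales):
    """Structural equation for sales."""
    return 3.0 * discount + 2.0 * season + u_sales

def mean_sales_under_do(discount_value, n=10_000, seed=0):
    """Intervention: force discount to a value, ignoring its usual cause."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        season = rng.gauss(0, 1)
        u_sales = rng.gauss(0, 1)
        total += sales_eq(discount_value, season, u_sales)
    return total / n

def counterfactual_sales(observed, new_discount):
    """Counterfactual for one observed unit: abduction, action, prediction."""
    # Abduction: recover this unit's noise term from what actually happened.
    u_sales = observed["sales"] - sales_eq(observed["discount"], observed["season"], 0.0)
    # Action and prediction: replay the same unit with a different discount.
    return sales_eq(new_discount, observed["season"], u_sales)

# Average effect of setting the discount to 1 versus 0 for everyone.
effect = mean_sales_under_do(1.0) - mean_sales_under_do(0.0)

# What would this one unit have sold had its discount been 1.0 instead of 0.5?
unit = {"season": 1.0, "discount": 0.5, "sales": 4.0}
cf = counterfactual_sales(unit, new_discount=1.0)

print(f"average effect of do(discount=1) vs do(discount=0): {effect:.2f}")
print(f"counterfactual sales for this unit at discount=1.0: {cf:.2f}")
```

In this toy world the intervention recovers the coefficient of 3.0 that was written into the sales equation, and the counterfactual answers a "what if" for a single observed case by holding that case's unobserved circumstances fixed. Real systems would have to learn or elicit the causal structure first, which is the hard part.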

It’s my view that causal reasoning will become an integral component of future-state agentic systems. These systems will orchestrate an ecosystem of collaborating AI agents and a mix of predictive, generative, and causal models to help people problem-solve and make better decisions.

Since Agentic AI aims to help humans (and organizations) achieve goals, it’s my view that differentiation and ROI expansion will grow as a function of the degree of causality infused into Agentic AI systems.

Causal Reasoning Concepts

People, businesses, and markets rely heavily on the concept of causality to function. It allows us to explain events, make decisions, plan, problem-solve, adapt to change, and guide our actions based on consequences.

Given that humans are causal by nature, AI must also become causal by nature in order to create a truly collaborative experience between humans and machines.

In other words, without causal reasoning, AI will lack the ability to mimic how people think or how the world works, as nothing can happen or exist without its causes.

More specifically, causal reasoning will help businesses better know:

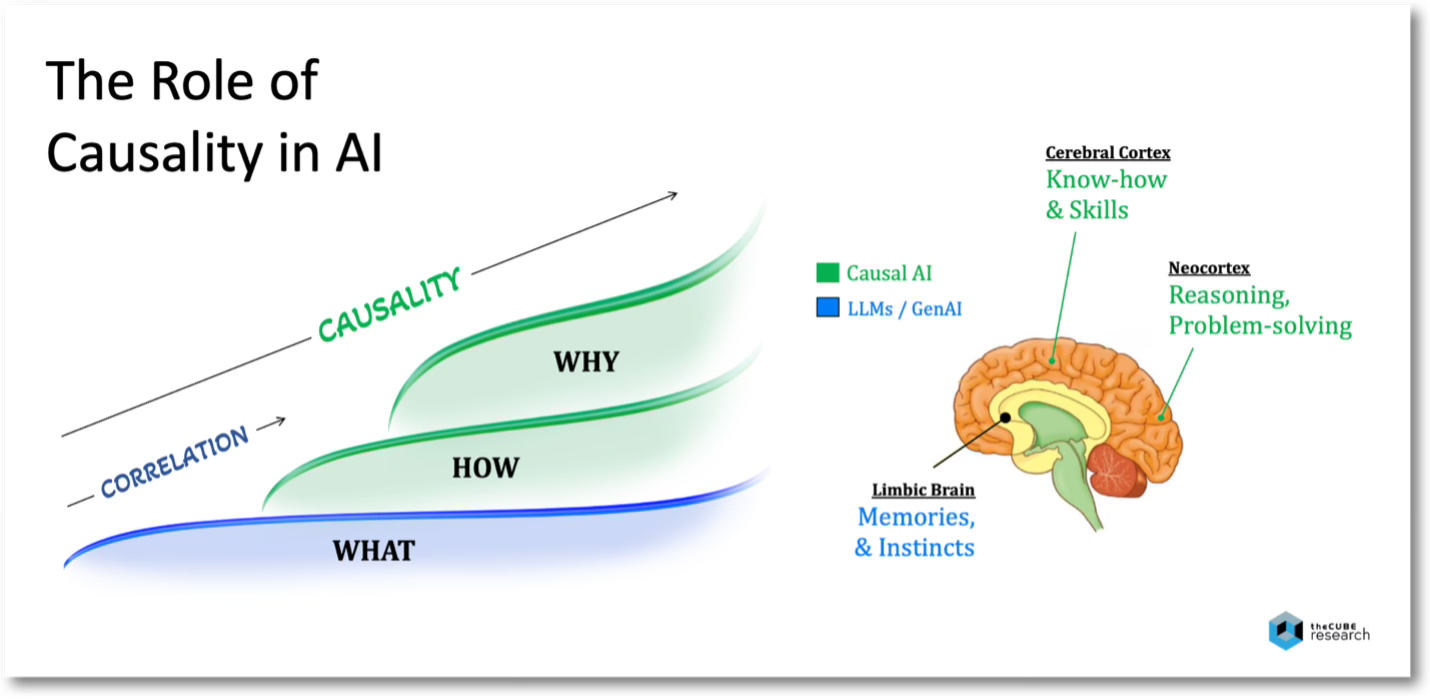

WHAT to do: Today’s GenAI and LLM designs correlate variables across datasets, telling us how much one changes when others change. They essentially swim in massive lakes of data to identify patterns, associations, and anomalies that are then statistically processed to predict an outcome or generate content. LLMs operate similarly to the limbic brain, which drives instinctive actions based on memories. This is what makes them good at determining the “what,” automating tasks, and creating content.

HOW to do it: Beyond predicting or generating an outcome (the “what”), businesses also want to understand how to accomplish a goal or how an outcome was produced. This requires advanced transformations integrating correlative patterns, influential factors, neural paths, and causal mechanisms to decode the “HOW.” Have you ever tried to explain something without invoking cause and effect? Think of causal AI techniques as simulating the cerebral cortex, which is responsible for encoding explicit memories into skills and tacit know-how. This is key to recommending prescriptive action paths that are trusted, transparent, and explainable.

WHY do it: Businesses will also want AI to help them evaluate the consequences of the various actions they could take to improve outcomes. That is, why is one set of actions better than another? For AI to truly help humans reason and problem-solve, it must algorithmically understand precise cause-and-effect relationships, capturing the dynamics of why things happen so that people can explore various “what-if” propositions. This mimics the neocortex, which drives higher-order reasoning, such as decision-making, planning, and perception.

From a technical perspective, today’s predictive AI models operate on brute force, processing immense amounts of data through numerous layers of parameters and transformations. For an LLM, this involves decoding complex sequences to determine the next best token, one token at a time, until the model reaches a final output.

But no matter how big or sophisticated today’s LLMs and predictive AI models are, they still establish only statistical correlations (probabilities) between behaviors or events and an outcome. That is very different from saying that the outcome happened because of the behaviors or events. Correlation doesn’t imply causation. Equating the two risks creating an incubator for hallucinations, bias, and incorrect conclusions based on incorrect assumptions.
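A small simulation can show how a strong correlation appears with no causation behind it. The sketch below assumes a made-up world in which a hidden `season` variable drives both `ad_spend` and `sales`, while ad spend itself has zero effect on sales. The variable names and coefficients are hypothetical, and the adjustment shown (regressing out the confounder) is just one simple technique, not a claim about how any particular causal AI product works.

```python
import random

def ols_slope(xs, ys):
    """Slope of the least-squares line y = a + b*x."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var = sum((x - mx) ** 2 for x in xs)
    return cov / var

def residualize(values, control):
    """Remove the part of `values` that is linearly explained by `control`."""
    b = ols_slope(control, values)
    mv, mc = sum(values) / len(values), sum(control) / len(control)
    return [v - mv - b * (c - mc) for v, c in zip(values, control)]

rng = random.Random(0)
season, ad_spend, sales = [], [], []
for _ in range(20_000):
    z = rng.gauss(0, 1)                      # common cause (the confounder)
    season.append(z)
    ad_spend.append(z + rng.gauss(0, 1))     # season drives ad spend
    sales.append(2.0 * z + rng.gauss(0, 1))  # season drives sales; ads do nothing

# Naive view: regress sales directly on ad spend.
naive = ols_slope(ad_spend, sales)

# Adjusted view: strip the season's influence from both, then regress.
adjusted = ols_slope(residualize(ad_spend, season), residualize(sales, season))

print(f"naive slope of sales on ad spend: {naive:.2f}")
print(f"slope after adjusting for season: {adjusted:.2f}")
```

The naive slope comes out close to 1, which a correlation-only model would happily read as "more ad spend, more sales." Once the season is accounted for, the slope collapses to roughly zero, which matches how the data was generated. The catch in practice is that the confounder has to be known and measured before it can be adjusted for.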

In addition, correlation-driven AI systems generally operate in mostly static environments because they rely on statistical correlations and patterns derived from historical data. They often assume that the relationships within the observed patterns will remain stable over time. For AI systems to adapt and respond to changing conditions, they must understand the underlying causes driving those relationships.
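The stability assumption can be illustrated with another toy simulation. In this hypothetical setup, sales are caused only by `season`, and ad spend merely tracks the season in historical data. A "proxy" model that predicts sales from ad spend looks fine until a policy change decouples ad spend from the season. A model built on the actual cause keeps working. All names, coefficients, and the shift itself are assumptions for illustration.

```python
import random

def fit_line(xs, ys):
    """Least-squares intercept and slope for y = a + b*x."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return my - b * mx, b

def mse(model, xs, ys):
    """Mean squared prediction error of a fitted line."""
    a, b = model
    return sum((a + b * x - y) ** 2 for x, y in zip(xs, ys)) / len(ys)

def generate(n, rng, ads_follow_season):
    """Return (season, ads, sales) lists from the toy world."""
    season, ads, sales = [], [], []
    for _ in range(n):
        s = rng.gauss(0, 1)
        if ads_follow_season:
            a = s + rng.gauss(0, 0.5)  # historical regime: ads track the season
        else:
            a = rng.gauss(0, 1)        # new regime: ads are set independently
        season.append(s)
        ads.append(a)
        sales.append(2.0 * s + rng.gauss(0, 0.5))  # only season causes sales
    return season, ads, sales

rng = random.Random(1)
train = generate(5_000, rng, ads_follow_season=True)
past = generate(5_000, rng, ads_follow_season=True)     # same regime as training
future = generate(5_000, rng, ads_follow_season=False)  # regime after the change

proxy_model = fit_line(train[1], train[2])   # sales from ad spend (a correlate)
causal_model = fit_line(train[0], train[2])  # sales from season (the cause)

proxy_past = mse(proxy_model, past[1], past[2])
proxy_future = mse(proxy_model, future[1], future[2])
causal_past = mse(causal_model, past[0], past[2])
causal_future = mse(causal_model, future[0], future[2])

print(f"proxy model error:  past={proxy_past:.2f}  future={proxy_future:.2f}")
print(f"causal model error: past={causal_past:.2f}  future={causal_future:.2f}")
```

The proxy model's error jumps several-fold once the historical pattern breaks, while the causal model's error stays about the same. The example is deliberately simple, but it captures why a model that has only memorized past associations can fail when conditions change.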

This challenge becomes even more apparent when LLMs are presented with more ambiguous, goal-oriented prompts, which are at the heart of Agentic AI’s promise of value.

A recent study by Apple explored the limits of LLM reasoning, finding that even state-of-the-art LLMs can experience up to a 65% decline in accuracy due to difficulties in discerning pertinent information and processing problems. The findings illustrate the risks of relying on an LLM for problem-solving, as it is incapable of genuine logical reasoning.

While LLMs typically forecast or predict potential outcomes based on historical data, Causal AI goes a step further by elucidating why something happens and how various factors influence each other. In addition, Causal AI can understand statistical probabilities and how those probabilities change when the world around them changes, whether through intervention, creativity, or evolving conditions.

This is crucial because, without causality, AI agents will only perform well if your future resembles the past, which is rarely the case in inherently dynamic businesses. After all, businesses rely on people to make decisions and act, and those people work within dynamic environments.

This is why, in part, the 2024 Gartner AI Hype Cycle predicted that Causal AI would become a “high impact” technology in the 2–5 year timeframe, stating:

“The next step in AI requires causal AI. A composite AI approach that complements GenAI with Causal AI offers a promising avenue to bring AI to a higher level.”

I couldn’t agree more.

What to Do

Perhaps now is the time to start preparing, especially for those who are pursuing the promise of agentic AI. I’d recommend you:

• Build competency in both agentic and causal AI

• Evaluate the impact of causality on your use cases

• Engage with the ecosystem of pioneering vendors

• Experiment with the technology and build skills

I’d also recommend watching the following podcasts:

• From LLMs to SLMs to SAMs: How Agents Are Redefining AI

• OpenAI Advances AI Reasoning, But The Journey Has Only Begun

Stay tuned for the next in this series of research notes on the advent of Causal AI, where I’ll cover an array of real-world use cases and their ROI achievements.

Thanks for reading. Feedback is always appreciated.

As always, contact me on LinkedIn if I can be of help.